Gemini 3 Pro: A New Era of Intelligence from Google DeepMind

On November 18, 2025, Google CEO Sundar Pichai announced what may be the most significant advancement in AI this year: Gemini 3, described as “our most intelligent model that helps you bring any idea to life.” The release of Gemini 3 Pro marks a watershed moment in the AI landscape, with leaked benchmarks showing it outperforming not just its predecessor Gemini 2.5 Pro, but also top competitors like Claude Sonnet 4.5 and OpenAI’s GPT-5.1.

Image source: Google Blog

The Numbers That Have the AI Community Buzzing

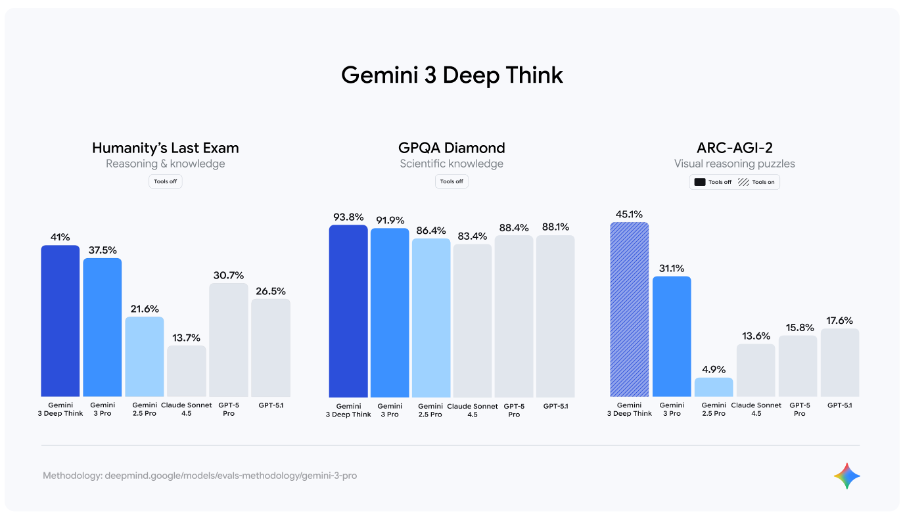

The benchmark leaks have sent shockwaves through the AI community on X (formerly Twitter), with researchers and developers expressing amazement at Gemini 3 Pro’s performance. According to leaked benchmark charts and the official model card published by DeepMind, Gemini 3 Pro demonstrates remarkable superiority across multiple metrics.

The model achieved 91.9% on GPQA Diamond compared to GPT-5.1’s 88.1%, representing a significant leap in graduate-level reasoning capabilities. On AIME 2025, Gemini 3 Pro scored 95.0% versus GPT-5.1’s 94.0%, showcasing its mathematical problem-solving prowess. Perhaps most impressively, the CharXiv Reasoning score of 81.4% towers over GPT-5.1’s 69.5%, creating a nearly 12 percentage point gap that signals a substantial advancement in complex reasoning capabilities.

Gemini 3 Pro benchmark comparison showing superior performance across multiple evaluation metrics
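To make the gaps concrete, here is a small Python calculation that uses only the scores quoted above. Besides the raw percentage-point difference, it computes the relative reduction in error rate (how much smaller Gemini 3 Pro's error rate, 100 minus the score, is compared with GPT-5.1's). This is another common way to read gaps near the top of a benchmark's scale. The helper names are illustrative.

```python
# Scores (in percent) as quoted in this article: (Gemini 3 Pro, GPT-5.1).
SCORES = {
    "GPQA Diamond": (91.9, 88.1),
    "AIME 2025": (95.0, 94.0),
    "CharXiv Reasoning": (81.4, 69.5),
}


def point_gap(gemini: float, gpt: float) -> float:
    """Absolute difference in percentage points, rounded to one decimal."""
    return round(gemini - gpt, 1)


def error_reduction(gemini: float, gpt: float) -> float:
    """Relative drop in error rate (100 - score) going from GPT-5.1 to Gemini 3 Pro."""
    gpt_error = 100.0 - gpt
    gemini_error = 100.0 - gemini
    return (gpt_error - gemini_error) / gpt_error


for name, (gemini, gpt) in SCORES.items():
    print(f"{name}: {point_gap(gemini, gpt):+.1f} points, "
          f"{error_reduction(gemini, gpt):.0%} fewer errors")
```

On these numbers, the 11.9-point CharXiv gap works out to roughly 39% fewer errors. The 3.8-point GPQA Diamond gap is still about a 32% error reduction, which is why small-looking point gaps at the top of a benchmark can be meaningful.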

Technical Architecture: Built to Go Big

Gemini 3 Pro represents a significant architectural evolution, built on a sparse Mixture-of-Experts (MoE) transformer architecture. Instead of running every parameter on every input, a learned router sends each input token to a small subset of specialized “expert” sub-networks, so only part of the model is active at any one time. This lets the total parameter count grow very large while keeping the compute spent per token comparatively modest, because experts that are not selected for a token do no work on it.
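Google has not published Gemini 3 Pro's expert count, router design, or how many experts fire per token, so the following is only a generic sketch of top-k sparse MoE routing in plain Python, not Gemini's implementation. The names `moe_forward` and `gate_weights`, the toy experts, and the choice of k are all hypothetical. The point it illustrates is that experts outside the top k are never called, so per-token expert compute scales with k rather than with the total number of experts.

```python
import math
from typing import Callable, List, Sequence

# A toy "expert": any function mapping a vector to a vector of the same length.
Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: List[float],
    gate_weights: List[List[float]],  # hypothetical router matrix: one row per expert
    experts: List[Expert],
    k: int = 2,
) -> List[float]:
    """Route one token vector `x` to its top-k experts and mix their outputs."""
    # 1. Router: one logit per expert (dot product of that expert's gate row with x).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # 2. Keep only the k highest-scoring experts; all others are skipped entirely.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # 3. Renormalize the gate over the selected experts only.
    mix = softmax([logits[i] for i in top])
    # 4. Weighted sum of the selected experts' outputs.
    out = [0.0] * len(x)
    for weight, i in zip(mix, top):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Four toy experts: expert i scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(4)]
    gate = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
    # Logits are [1, 0, 2, -1], so only experts 2 and 0 are evaluated.
    print(moe_forward([1.0, 0.0], gate, experts, k=2))
```

With, say, 2 of 8 experts active, each token touches roughly a quarter of the expert parameters (shared layers such as attention still run for every token). This is the sense in which sparse MoE models can grow total capacity without a proportional rise in per-token compute.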

Unlike models that bolt on multimodal capabilities as an afterthought, Gemini 3 Pro is natively multimodal from the ground up. The model seamlessly processes text, vision, audio, video, and code as integrated streams of information, more closely mirroring how humans perceive the world. This native multimodal design enables advanced language understanding and generation, sophisticated image analysis, comprehensive speech and sound processing, temporal video understanding, and multi-language programming capabilities all within a single unified architecture.

The model supports a context window of up to 1 million input tokens with up to 64K output tokens, and it accepts text, images, audio, and video files as input. A window this large makes it practical to analyze entire codebases, lengthy legal documents, or long videos in a single prompt without complex chunking strategies.
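A rough way to see what a 1M-token window changes in practice is to check whether a set of documents fits in one request or has to be split. The sketch below assumes about 4 characters per token, a common rule of thumb for English text. Gemini's actual tokenizer will differ, and images, audio, and video are counted differently. The sketch uses the 1M input figure quoted above. `estimate_tokens` and `plan_requests` are hypothetical helpers, not part of any Google SDK.

```python
import math
from typing import List

MAX_INPUT_TOKENS = 1_000_000  # "1M tokens (input)" as quoted in this article
CHARS_PER_TOKEN = 4           # rough rule of thumb for English text; real tokenizers differ


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based only on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def plan_requests(documents: List[str], limit: int = MAX_INPUT_TOKENS) -> List[List[int]]:
    """Greedily pack document indices, in order, into requests under `limit` estimated tokens.

    If everything fits, the result is a single request, i.e. no chunking is needed.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    used = 0
    for i, doc in enumerate(documents):
        cost = estimate_tokens(doc)
        if cost > limit:
            raise ValueError(f"document {i} alone exceeds the {limit}-token limit")
        if current and used + cost > limit:
            # Current request is full: close it and start a new one.
            batches.append(current)
            current, used = [], 0
        current.append(i)
        used += cost
    if current:
        batches.append(current)
    return batches
```

For example, three documents of about 100K estimated tokens each fit in a single request under a 1M limit. An illustrative 128K limit, by contrast, forces three separate requests. That is exactly the kind of chunking a larger window avoids.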

Gemini 3 Deep Think: Extended Reasoning Mode

One of the most exciting features announced alongside Gemini 3 Pro is Gemini 3 Deep Think, a specialized reasoning mode that takes AI problem-solving to unprecedented levels. This mode employs an extended reasoning process that allows the model to think longer and deeper on complex problems, showing its reasoning steps transparently while verifying and self-correcting its logic during inference.

Gemini 3 Deep Think demonstrates exceptional performance on challenging reasoning benchmarks

Deep Think’s benchmark results are remarkable. The mode looks especially strong at mathematical reasoning and proof generation, multi-step scientific problem-solving, complex logical puzzles and analytical challenges, and code debugging with algorithmic optimization. It is Google DeepMind’s answer to OpenAI’s reasoning-focused models such as o1, with the added benefits of native multimodality and visible reasoning steps that give users more insight into how the model reached its conclusions.
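Google has not detailed how Deep Think works internally, so treat the following only as a loose analogy for “thinking longer” and self-correcting. It is a toy propose, verify, and revise loop (here simply a binary search driven by verifier feedback) in which each failed check informs the next guess, under a fixed step budget. The function name `refine`, the feedback convention, and the example problem are hypothetical. None of it is Deep Think’s actual mechanism.

```python
from typing import Callable, Optional


def refine(check: Callable[[int], int], lo: int, hi: int, budget: int) -> Optional[int]:
    """Propose, verify, and revise an integer answer within `budget` steps.

    `check(c)` is a hypothetical verifier: it returns 0 if `c` is correct,
    a positive number if `c` is too high, and a negative number if too low.
    Returns the verified answer, or None if the budget runs out first.
    """
    for _ in range(budget):
        if lo > hi:
            return None  # search space exhausted: no valid answer in range
        guess = (lo + hi) // 2   # propose
        verdict = check(guess)   # verify
        if verdict == 0:
            return guess
        if verdict > 0:          # revise using the verifier's feedback
            hi = guess - 1
        else:
            lo = guess + 1
    return None
```

Searching 0 to 1000 for the integer square root of 1369 with `refine(lambda c: c * c - 1369, 0, 1000, budget=3)` runs out of steps and returns `None`. With `budget=20` it returns 37. This is a small-scale version of the idea that extra inference-time steps plus a reliable check can turn a wrong answer into a right one.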

Community Reaction: The Hype is Real

The AI community on X has erupted with excitement following the announcement and benchmark leaks. AI researchers are calling the 91.9% score on GPQA Diamond “absolutely insane,” and many see it as a new ceiling for AI reasoning. Developers who gained early access through Vertex AI report that the multimodal capabilities are unlike anything they’ve used before and call the model a genuine game-changer for production applications. The machine learning community has been particularly impressed by the CharXiv Reasoning gap over GPT-5.1, with many noting that Google DeepMind didn’t just catch up but leaped ahead.

Industry analysts are calling it “the most significant model release of 2025,” with some suggesting it could reset competitive dynamics in the AI space. The combination of superior benchmark performance, native multimodal capabilities, and massive context windows has created a level of excitement rarely seen in the AI community.

What This Means for the AI Landscape

Gemini 3 Pro’s performance sends a clear message that the race for AI supremacy is far from over. The benchmark results will undoubtedly push OpenAI, Anthropic, and other competitors to respond with their own advancements, likely accelerating the pace of innovation across the entire industry. With native multimodal support across text, vision, audio, and video, Gemini 3 Pro raises the bar for what users expect from frontier models and makes single-modality models look increasingly like legacy technology.

The combination of superior performance and massive context windows makes Gemini 3 Pro particularly attractive for enterprise applications, enabling complex document analysis, large-scale code understanding and generation, multimodal content creation, and advanced reasoning tasks that were previously impractical or impossible. The sparse MoE architecture suggests Google has found ways to deliver better performance without proportionally increasing computational costs, a crucial factor for widespread adoption that could democratize access to state-of-the-art AI capabilities.

Availability and Access

According to reports from the AI community, Gemini 3 Pro has been added to Vertex AI’s model list, with some users already testing via paid accounts. The model has been spotted on Google’s AI Studio platform, with official availability expected within hours or days of the November 18 announcement. API access is likely to follow Google’s tiered pricing model with different rate limits for various use cases, making it accessible to developers ranging from individual hobbyists to large enterprise customers.

Technical Specifications Summary

| Feature | Specification |
|---|---|
| Architecture | Sparse Mixture-of-Experts Transformer |
| Modalities | Text, Vision, Audio, Video, Code |
| Context Window | 1M tokens (input) |
| Output Capacity | 64K tokens |
| Training Paradigm | Multimodal from scratch |
| Primary Strengths | Reasoning, Multimodal Understanding |

What Makes Gemini 3 Pro Special?

The GPQA Diamond and CharXiv Reasoning scores aren’t just numbers on a leaderboard. They represent genuine advances in how AI models can tackle complex, multi-step problems requiring deep understanding. The model’s performance suggests it has developed new capabilities in breaking down complex questions, maintaining context across extended reasoning chains, and arriving at correct conclusions through systematic analysis rather than pattern matching.

Rather than treating different modalities as separate domains that need to be bridged, Gemini 3 Pro processes them as integrated streams of information. This seamless integration enables the model to understand relationships between text and images, correlate audio with visual information, and comprehend temporal relationships in video content. The result is a system that can handle real-world tasks involving multiple types of information without the friction and limitations of models that handle each modality separately.

The massive 1M token context window isn’t just impressive on paper. It enables practical applications that were previously impossible or required complex chunking strategies that often degraded performance. Developers can now analyze entire codebases to understand architectural patterns, review complete legal contracts without losing context, process full-length videos for detailed analysis, and maintain conversation history across extended interactions that span thousands of messages.
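As a minimal sketch of the “entire codebase in one prompt” workflow, the helper below walks a directory and keeps files with chosen extensions. It then concatenates them with a path header so the model can tell the files apart. The function name, the header format, and the default `.py` filter are assumptions for illustration. The resulting string would still need to fit the model’s input limit and be sent through whichever Gemini client you use.

```python
from pathlib import Path
from typing import Iterable


def pack_codebase(root: Path, suffixes: Iterable[str] = (".py",)) -> str:
    """Concatenate matching source files under `root` into one prompt string.

    Each file is prefixed with a header containing its path relative to `root`,
    and files are visited in sorted order so the output is deterministic.
    """
    wanted = set(suffixes)
    parts = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in wanted:
            rel = path.relative_to(root).as_posix()
            text = path.read_text(encoding="utf-8", errors="replace")
            parts.append(f"=== FILE: {rel} ===\n{text}")
    return "\n\n".join(parts)
```

A call such as `pack_codebase(Path("my_project"), suffixes=(".py", ".md"))` would produce a single string with every matching file labeled by its relative path. Sorting the paths keeps the prompt stable between runs, which makes results easier to compare.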

The Road Ahead

Gemini 3 Pro’s release raises several important questions about the future direction of AI development. How will competitors respond to these benchmark results? OpenAI and Anthropic are almost certainly working on successors to GPT-5.1 and Claude Sonnet 4.5, and this release may accelerate their timelines or push them to enhance their planned capabilities. The expanded capabilities of Gemini 3 Pro could also unlock entirely new use cases, much as GPT-3 enabled applications that had seemed impossible with earlier models.

Google’s competitive pricing strategy will be crucial for adoption, particularly in enterprise environments where cost-effectiveness can make or break deployment decisions. The company’s track record with Vertex AI pricing suggests they understand the importance of making powerful models accessible, but the economics of serving such a capable model at scale remain to be seen. Perhaps most intriguingly, if the Pro version delivers these results, what might an Ultra version achieve? The naming convention suggests that Google DeepMind has even more powerful models in development.

Key Takeaways

Gemini 3 Pro outperforms GPT-5.1 and Claude Sonnet 4.5 across multiple key benchmarks, establishing a new standard for state-of-the-art AI capabilities. The native multimodal architecture enables seamless cross-modal understanding that goes beyond what earlier approaches could achieve. The sparse MoE design delivers strong performance with computational efficiency, suggesting that Google has found new ways to scale AI capabilities without proportionally increasing costs. The 1M token context window opens new possibilities for complex tasks that require maintaining coherent understanding across vast amounts of information. The AI community’s enthusiastic response indicates genuine progress rather than incremental improvement, and the excitement appears well-justified by the technical achievements demonstrated.

Community Resources

For those interested in exploring Gemini 3 Pro further, Google’s official blog provides detailed information about the model’s capabilities and design philosophy. The technical documentation available through the Gemini 3 Pro Model Card offers deeper insights into the architecture and training methodology. Developers can access the model through Google Vertex AI, with various pricing tiers available for different use cases. The X community continues to share experiences and discoveries under the #Gemini3Pro hashtag, providing real-world insights into the model’s strengths and limitations.

Final Thoughts

Gemini 3 Pro represents more than just another incremental improvement in AI capabilities. It’s a statement of intent from Google DeepMind that they’re not just competing in the AI race but aiming to lead it. The benchmark numbers are impressive, but what excites me most is the potential for what developers and researchers will build with these capabilities. From advanced reasoning to seamless multimodal understanding, Gemini 3 Pro provides tools that were science fiction just a few years ago.

As Sundar Pichai stated in the announcement, this is about “bringing any idea to life.” With Gemini 3 Pro, that vision feels closer to reality than ever before. The AI landscape just got a lot more interesting, and the next few months will reveal whether this release marks a temporary lead or a more fundamental shift in the competitive dynamics of frontier AI development.